The 2019 World Congress on Advances in Nano, Bio, Robotics and Energy (ANBRE19)

An Objectness Score for Accurate and Fast Detection during Navigation

by Hongsun Choi, Mincheul Kang, Youngsun Kwon, and Sung-Eui Yoon

Korea Advanced Institute of Science and Technology (KAIST)

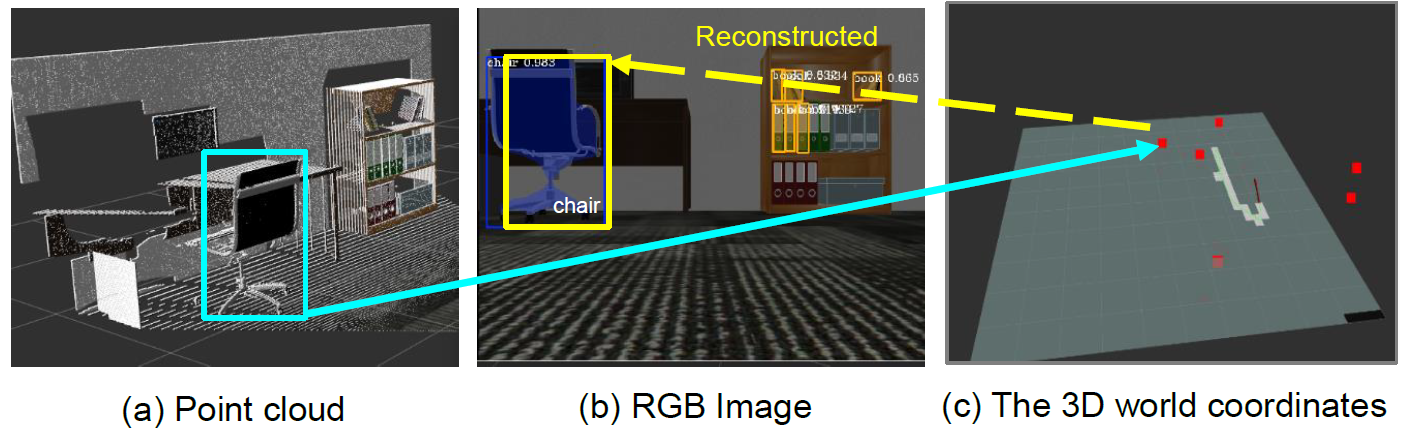

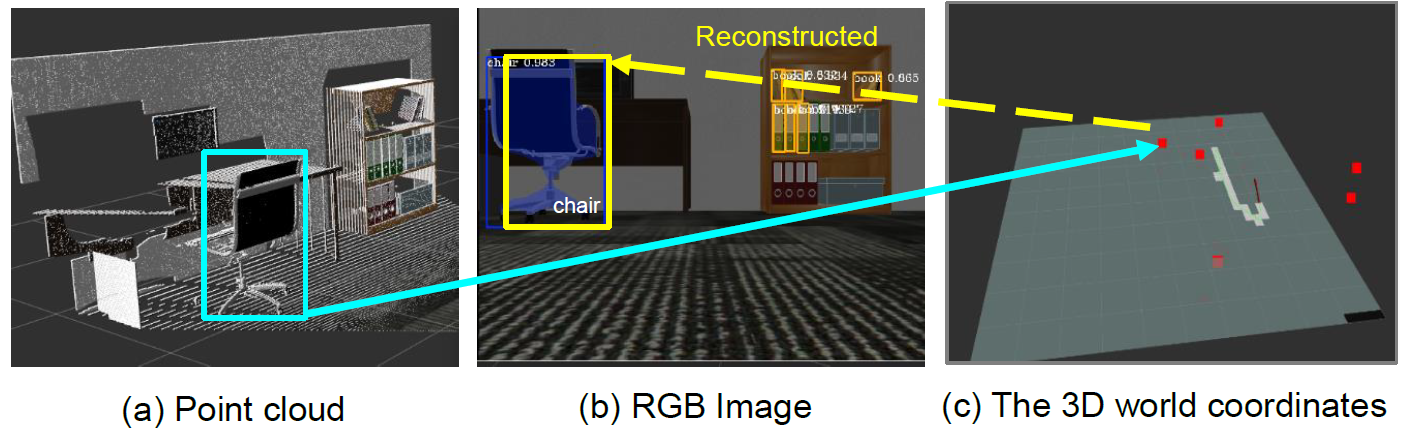

These figures show how our approach maintains the locations and classes of
detected objects in 3D space and reconstructs them on the 2D image plane.
(a) and (b) show a point cloud and an RGB image from the RGB-D camera mounted
on a mobile robot; (c) is a reconstructed 3D map in 3D world coordinates. When
the robot recognizes a chair during navigation, we take the points that fall
inside the chair's 2D bounding box (the box in (a)) and convert them to a
single 3D location (the red point in (c)). This 3D record stores the 2D
bounding box location, class probability, mean depth, etc. When the chair
enters the field of view again, we project the 3D location of the previously
detected object onto the RGB image plane (the yellow box in (b)) using an
affine projection. With this information, we can decide which class is more
accurate: that of the reconstructed 2D bounding box (the yellow box in (b)) or
that of the currently detected 2D bounding box (the blue box in (b)).
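
The two geometric steps in this caption can be sketched as follows. This is a minimal illustration, not the authors' implementation: the page does not give the projection matrices, so the sketch assumes a standard pinhole camera model with a known world-to-camera pose, and it assumes the 3D location is the mean of the points inside the bounding box. The names `centroid`, `project_to_image`, and all parameters are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]
Matrix3 = Sequence[Sequence[float]]


def centroid(points: Sequence[Point3]) -> Point3:
    """Mean of the 3D points that fall inside a 2D bounding box.

    Assumption: the object's 3D location is the plain average of its points.
    """
    if not points:
        raise ValueError("bounding box contains no valid depth points")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


def project_to_image(
    p_world: Point3,
    rotation: Matrix3,      # 3x3 world-to-camera rotation (assumed known)
    translation: Point3,    # world-to-camera translation (assumed known)
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> Optional[Tuple[float, float]]:
    """Project a stored 3D world point to pixel coordinates (pinhole model)."""
    # World frame -> camera frame: p_cam = R * p_world + t
    x, y, z = (
        sum(rotation[i][j] * p_world[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0.0:
        # The point lies behind the camera, so it cannot appear in the image.
        return None
    # Camera frame -> pixel coordinates using the intrinsics.
    return (fx * x / z + cx, fy * y / z + cy)
```

With an identity rotation, zero translation, and intrinsics `fx = fy = 500`, `cx = 320`, `cy = 240`, a point 2 m straight ahead of the camera projects to the principal point `(320.0, 240.0)`. A `None` result means the stored object is not visible in the current frame.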

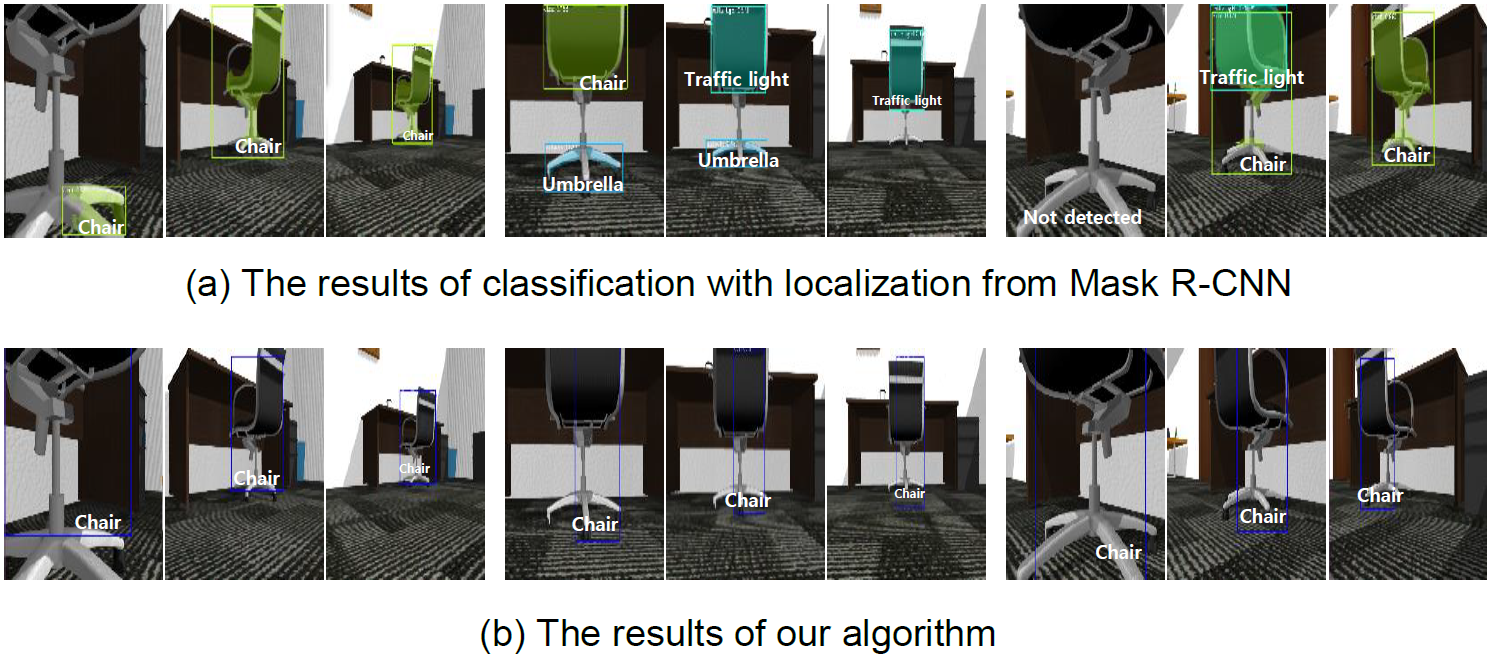

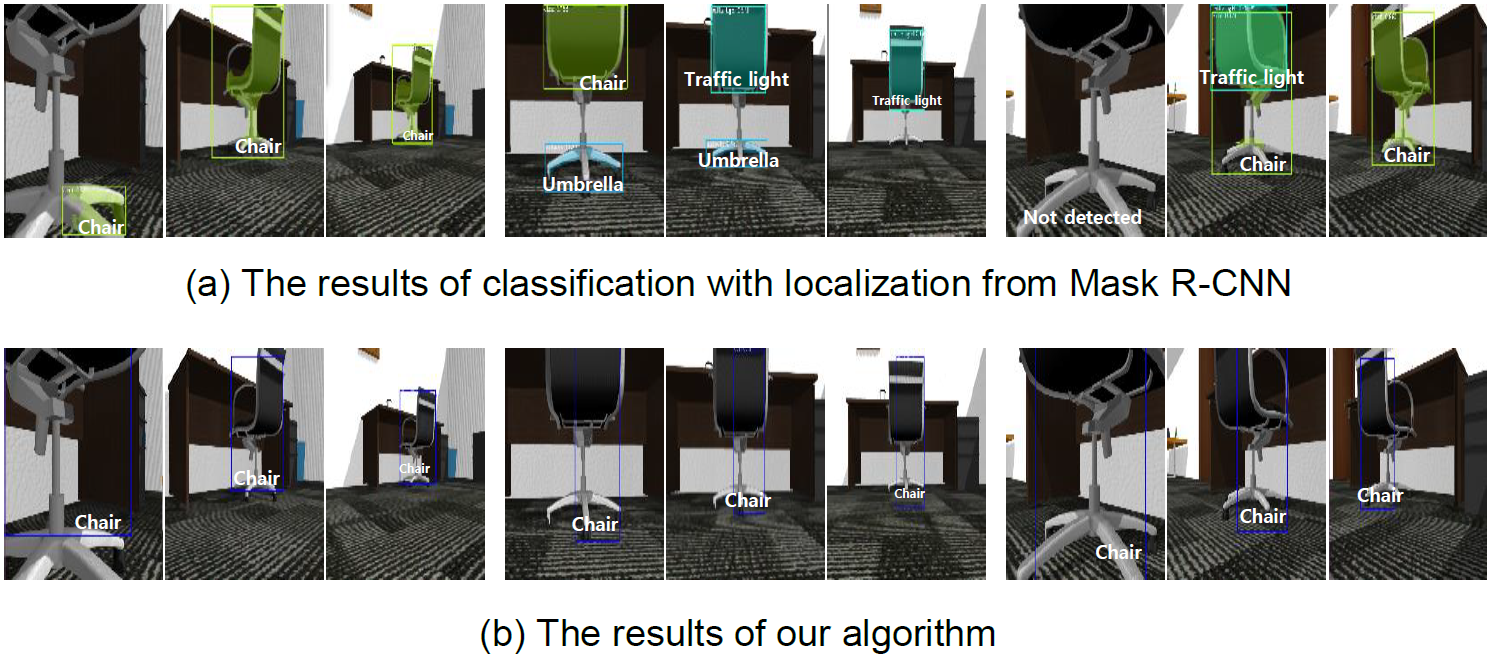

These image sequences show the results of classification with localization
during navigation of a mobile robot. Our method robustly identifies objects
during navigation.

Abstract

We propose a novel method that uses an objectness score to maintain the
locations and classes of objects detected by Mask R-CNN during mobile robot
navigation. The objectness score measures how well the detector identifies
the locations and classes of objects during navigation. Specifically, it is
designed to increase when there is sufficient distance between a detected
object and the camera. During navigation, we transform the locations of
objects from 3D world coordinates into 2D image coordinates through an affine
projection and use the objectness score to decide whether to retain the
classes of detected objects. We conducted experiments to determine how well
the locations and classes of detected objects are maintained at various angles
and positions. The experimental results showed that our approach is efficient
and robust, regardless of changing angles and distances.
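
The retention decision described in the abstract could look roughly like the sketch below. The abstract does not give the score's formula, so everything here is an assumption made for illustration: the score is taken to be the class probability scaled by a weight that ramps from 0 to 1 as depth grows from `near_m` to `far_m`, and the stored class is kept unless the current detection scores strictly higher. The function names and the threshold values are hypothetical.

```python
from typing import Tuple

# (class label, class probability, depth in metres); a hypothetical record layout.
Detection = Tuple[str, float, float]


def distance_weight(depth_m: float, near_m: float = 0.5, far_m: float = 2.0) -> float:
    """Weight in [0, 1] that grows with distance to the camera.

    Assumption: a linear ramp between near_m and far_m; the paper's actual
    weighting is not stated on this page.
    """
    if far_m <= near_m:
        raise ValueError("far_m must be greater than near_m")
    t = (depth_m - near_m) / (far_m - near_m)
    return max(0.0, min(1.0, t))


def objectness_score(class_prob: float, depth_m: float) -> float:
    """Illustrative score: detector confidence scaled by the distance weight."""
    return class_prob * distance_weight(depth_m)


def select_label(stored: Detection, current: Detection) -> str:
    """Keep the stored class unless the current detection scores strictly higher."""
    stored_score = objectness_score(stored[1], stored[2])
    current_score = objectness_score(current[1], current[2])
    return stored[0] if stored_score >= current_score else current[0]
```

Under these assumptions, a chair labelled with probability 0.7 from 2.5 m away is kept over a "sofa" label with probability 0.9 produced from 0.6 m, because the close-range detection receives a small distance weight.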

Contents

Paper: PDF (0.7MB)
